Note

While looking in an old folder, I found this essay I wrote about the World Wide Web for a school project (somewhere around 1994 or 1995). I figured it might interest someone, so why not put it up?

At the end of World War II, it might have seemed that science could do anything to enhance the physical powers of man. Advanced detectors could outperform human senses, automated relays could react more quickly than the best reflexes, and the awesome power of nuclear weaponry extended man's destructive capabilities. The one factor that technology did not seem to have changed much was the power of the human mind. Yet in a somewhat cryptic work of prophecy entitled As We May Think, Dr. Vannevar Bush (science advisor to President Roosevelt during WWII) suggested that it was time to redirect the scientific effort devoted to warfare toward the task of expanding man's mental arsenal.

As We May Think appeared as an article in The Atlantic Monthly in 1945. In the article, Dr. Bush proposed a device called a "memex," which would be a "file and library" that is "mechanized so that it may be consulted with exceeding speed and flexibility." [1] In addition, this device would provide the capability for associative indexing, whereby "any item may be caused at will to select immediately and automatically another." This was the critical feature of the memex, which would serve as an "enlarged intimate supplement" to the memory of its user. By providing an arbitrary method for connecting fragments of information, the memex would mimic the tangential nature of human thought. If properly developed, the memex could give man command over the inherited knowledge of the ages.

Video terminals and word processors had not been invented in 1945, so Bush described the memex in terms of analog technologies rather than the fledgling computers that existed at the time. The device he proposed was something like a microfilm browsing machine, with levers as inputs and a completely mechanical process of association and document retrieval. Despite this failure to anticipate advances in digital document technology, As We May Think goes on to describe someone performing a research task with a memex, and the procedure sounds remarkably like traversing the network of information that exists on the World Wide Web today. So nearly fifty years later, it would seem that the "memex" concept has finally reached full bloom.

It is tempting to assume that scientists have spent the past fifty years working to overcome the technical barriers to Bush's imperative, and that it was this concentrated effort to achieve his vision that brought about the Web. In fact, it is possible to selectively construct a progressive sequence of developments in non-linear media spanning the period between As We May Think and the World Wide Web. Yet studying the primary sources, one finds that this treatment gives an incomplete picture of the elements that contributed to the Web's development. This paper attempts to present a more comprehensive view of the social factors, lost knowledge, and government policy that guided technology along a wayward path--one which unintentionally became the core of the WWW.

Non-linear media

There are two major trains of development that brought about the existence of the Web. One such train is the advancement of non-linear media, which has generally been grounded in the ideology and rhetoric of using computers to enhance the process of accessing stored knowledge. The common terms used in the field today ("hypermedia" and "hypertext") were coined in 1965 by a man named Ted Nelson, whose constant evangelism of using computers to produce non-linear documents has earned him a reputation as the "father of hypertext."

Nelson's primary background was in media and publishing. By the time he was out of college he had published books, newspapers, and a long-playing record. When he saw computers in 1960, he imagined their potential for creating new possibilities in media, and saw that "the future of humanity is at the interactive computer screen." [2] Nelson realized that sequential writing, which had been in place for centuries, was a carryover from spoken language--which is necessarily ordered. He also observed that books on paper are not suited to being read except in sequence. In his opinion, this created an inherent conflict with the act of writing down ideas, because "the structures of ideas are not sequential; they tie together every which-way." [3]

For Nelson, writing was the act of forcing non-sequential ideas into a sequence. If a machine could be employed to create a form of literature that could be navigated in more flexible ways, this would allow for a new kind of writing that he called "hypertext." Another fundamental concept that Nelson is credited with is the realization that whether something is text, a picture, a sound, or a movie, it is still an element of information. In theory, a machine could be made to fetch and index any of these media forms in a consistent and integrated manner; he called this concept "hypermedia."

In his book Literary Machines, Nelson outlined a plan for a project he called "Xanadu," a name taken from Coleridge's poem "Kubla Khan." He interpreted the word as meaning "that magic place of literary memory where nothing is [ever] lost." Conceived in 1967, the Xanadu idea was to sell franchises in something like a library or a video store, where artists and authors would "publish" their work electronically, making it retrievable from any other Xanadu location worldwide. In addition, any document in the Xanadu system could have associative references to any other document. This idea came straight out of Vannevar Bush's memex concept, and the influence is clearly acknowledged: Nelson included the entire text of As We May Think in the book. [3]

Because of his background in media, Nelson thought carefully about copyrights and royalties. Putting documents into the Xanadu system cost money, and whenever anyone else browsed through those documents at another Xanadu franchise (possibly on the other side of the world), a corresponding royalty charge would be incurred. He and his team developed a number of very complex algorithms to make sure that documents were stored redundantly to protect against catastrophes, and to migrate frequently used documents onto storage devices with faster access times.

Nelson has spent much of his life working on Xanadu, but despite his top billing in the hypertext world, the project has not been realized. However, another individual who was also inspired by Vannevar Bush's article actually produced a number of working software implementations that exceeded anything Nelson had built, and perhaps his vision as well. This was Douglas Engelbart, who is credited with the invention of the computer mouse, the word processor, and the graphical point-and-click interface (all of which are integral parts of the World Wide Web today). Less well known is the fact that he also built a network-based associative system for shared hypertext document storage and selection, independently of Nelson's work.

Engelbart was drafted into the military during WWII and was assigned to work on radar. While working on the radar systems, Engelbart came to realize that the screens could be used to display images, pictures, and words. Although he had never seen a computer at the time, he made the connection that a computer could be used to control these screens. Having embraced Vannevar Bush's ideals after reading As We May Think in 1945, Engelbart had the vision that such computer-based tools could augment human intellect. Once he was out of the military, he decided to take a job wherever he could get access to computers, and in 1957 he went to work on projects at the Stanford Research Institute (SRI).

The Stanford Research Institute was interested in conducting research into scientific, military, and commercial applications of computers. While interviewing for the job, Engelbart described his ideas of "getting computers to interact with people, in order to augment their intellect." The interviewer asked him how many people he had told about this idea, and Engelbart said he had told no one. In response, the interviewer said, "Good. Now don't tell anybody else. It will sound too crazy. It will prejudice people against you." [5] Despite Vannevar Bush's widely read suggestions about using machines to increase man's command over scientific knowledge, the computing community had not conceived of the huge, impersonal computers of the time as interacting with a wide range of scientists--much less the general public!

Engelbart was the first to conceive of human-computer interaction as communication. The upshot was that for that communication to be effective, computer tools had to present a convenient interface to the user. To achieve this vision, Engelbart eventually requested the establishment of an "Augmentation Lab" at SRI. Despite some friction, his request was granted, and during the 1960s he developed the mouse and many of the features found in all GUIs today (such as windows, icons, and mouse pointers). In 1963, he set out the conceptual framework for an interactive hypermedia system in a paper entitled A Conceptual Framework for the Augmentation of Man's Intellect. He didn't use the term "hypermedia" in his proposal, of course, because Nelson didn't coin the term until 1965! Engelbart was conceiving his system independently, and some histories consider him to be the true "inventor" of hypertext.

Engelbart's project was called "NLS," which stood for "oN-Line System." It included integrated help, electronic mail, teleconferencing, and interactive hypermedia. To him, hypertext was quite important: it would allow users to expand the information available to them and facilitate collaborative authoring. In NLS, for example, scientific journals could contain references that linked to other journals with a single point and click. There were many other fascinating capabilities that would today be labeled "groupware" features, such as annotations and shared-screen telephoning to enhance real-time, one-to-one communication. In addition, NLS was based on a computer network, which maps directly to the nature of the Web.

In 1968, NLS was complete and was publicly demonstrated to the computing community. Although NLS anticipated many of today's needs, surprisingly little of Engelbart's work was carried over into the world of personal computing at the time. Xerox PARC and the developers of the Macintosh operating system borrowed pieces of the project, taking icons, windows, and mice. Yet the shared hypermedia portion of NLS (and its equally important groupware implications for communicating and sharing information) was not adopted. So although the World Wide Web has implicitly inherited Engelbart's work in the form of a graphical point-and-click interface, it was not directly influenced by the hypermedia features of NLS.

From the evangelism of Nelson and the work of Engelbart came several computer products based on hypertext technology. For many of the current generation of computer users, these products have defined the paradigm, since few have seen NLS and even fewer have seen the fragments of Xanadu that exist. A very successful hypertext authoring tool called "HyperCard" was released by Apple in 1987, and its name shows the influence of Nelson's terminology. A similar product called ToolBook was later released for the IBM PC, and hypertext-based help systems have found their way into almost every computer product released since.

Creation of the Internet

Now that we have briefly covered the evolution of hypermedia, we can examine the developments that led to the creation of the Internet, the other key technology that makes the World Wide Web possible. The Internet was not created to be the backbone of a distributed hypertext document system, although that is exactly what it eventually became. It was actually created as a military research project.

In the 1960s, the RAND Corporation (America's foremost Cold War think tank) contemplated a grim problem: How could the military bases in the United States maintain communications after a nuclear war? The existing telephone and radio systems were centrally controlled, so no matter how thoroughly the crucial points were armored, they would always be vulnerable to atomic bombs. Paul Baran at RAND proposed a solution, made public in 1964: a distributed network in which every node would be equal in status to every other node. Each node would be able to originate, pass, and receive messages from its neighbors, and each message would adaptively find its own way through the network. This made less efficient use of resources than the telephone system, but the network would still be able to transmit between the remaining nodes after losing arbitrary links.

This distributed approach was first tested experimentally in Britain, and a prototype was implemented on a grander scale in the United States. By December 1969, there were four nodes on the infant network, which was named ARPANET after its Pentagon sponsor, the Advanced Research Projects Agency. The four computers could transfer data over dedicated high-speed transmission lines, and new nodes could be added at will. Scientists could then draw on the combined computing power of the network, a valuable objective because computing time was still a precious resource in the early 1970s. Thanks to ARPANET, scientists and researchers could share one another's computer facilities over long distances.

By the second year of operation it became clear that ARPANET's users had different ideas about how the network should be used. Rather than time-sharing the CPUs, the scientists involved with the project had turned it into a "dedicated, high-speed, federally subsidized electronic post-office." [6] Researchers were using ARPANET to collaborate on projects, to trade notes on work, and eventually just to chat. News and personal messages became the main traffic of the ARPANET, much to the chagrin of the system's administrators. This use of the network couldn't be stopped: people were simply more enthusiastic about its potential for rapid communication than about anything else. As the social role of the network became defined, its original function as a distributed post-nuclear emergency communications medium was forgotten.

Fantasy and science fiction fans made up a large portion of the growing network population, and in 1979 the first Multi-User Dungeon (MUD) was created at the University of Essex. It was an online Dungeons and Dragons-style game that several people could play simultaneously, and such innovations introduced a wide audience to the kinds of communication the Internet made possible. In 1986, the NSF established supercomputing centers at Princeton, Pittsburgh, UCSD, UIUC, and Cornell, causing an explosion in the number of Internet connections from universities. As college students became the core users of the Internet, they produced a large quantity of freely available "social" software. In 1988 Jarkko Oikarinen developed Internet Relay Chat (IRC), which still thrives today as a kind of "party line" for thousands of simultaneous users on the Internet.

Significant collaborative hypermedia developments were absent during this time, even though the existing technology was far beyond what a distributed hypermedia tool would have required. In fact, only the most rudimentary data transfer protocols existed during the 1970s and 1980s, and they required users to know exactly which node on the Internet held the data they were looking for. It was not until 1990 that the first tool for managing the massive amount of information accruing on the Internet was released. The program was called "Archie," and it was created by Peter Deutsch, Alan Emtage, and Bill Heelan at McGill. Given the name of a particular data file, it would automatically search through Internet sites, attempting to find the file's location.

The idea of creating a single entry point for finding data on the Internet caught on, and Archie's work was taken further when Wide Area Information Servers ("WAIS") were invented in 1991 by Brewster Kahle. That year also saw the introduction of a system called "Gopher" by Paul Lindner and Mark P. McCahill of the University of Minnesota. Both tools were based on servers that could be searched for information, but Gopher established a document hierarchy that could be browsed using specialized client software. Although it wasn't a hypertext system per se, Gopher placed no restrictions on what an individual data element had to be. With the right browser, Gopher could do everything from displaying pictures to performing phone book searches. Yet it was not until the release of the World Wide Web that true hypermedia gained widespread support on the net (although the Web's standards were carefully crafted to support interfaces to existing services like Gopher).

The World Wide Web

The World Wide Web itself was designed by Tim Berners-Lee, who was working at CERN (the European Particle Physics Laboratory in Geneva, Switzerland). Tim had graduated from The Queen's College, Oxford, with first-class honors in physics, and around 1980 he spent six months at CERN as an independent consultant software engineer. While he was there, he wrote (for his own private use) a program that stored information using random associations. He called the program "Enquire," and although it was never published, it formed the conceptual basis for the World Wide Web. Tim's background was in physics, and he had never read any of Ted Nelson's books or seen Doug Engelbart's NLS. Yet Nelson's thinking was so much "in the air" that the terminology and basics of hypertext (if not its ideology and rhetoric) had filtered through to Tim, and so he used the term "hypertext" when describing his work.

In 1984, Berners-Lee took up a fellowship at CERN to work on distributed real-time systems for scientific data acquisition and system control. Realizing that a large amount of data was being gathered on computers throughout CERN's network, Tim decided that there should be an easy way for anyone at the facility to access the information stored on any other machine. In 1989, he proposed a hypertext project, which he dubbed the "WorldWideWeb." Based on the earlier "Enquire" project, it was designed to allow people to work together by combining their knowledge in a web of hypertext documents. These documents were written in a simple "markup language" called HTML. Tim's team at CERN wrote the first World Wide Web server and the first client, a hypertext browser and editor that ran in the NeXTStep environment. This work was started in October 1990, and the program was first made available in December. In 1992 the WWW was released to the general public.

Note

Interestingly, I was actually doing a little bit with the World Wide Web in 1992.

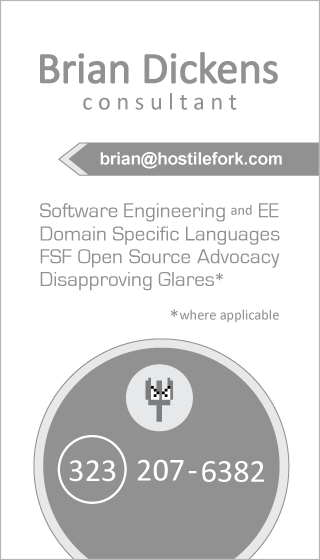

Nahum D. Gershon, William Ruh, Brian Dickens, Joshua LeVasseur: Information Highways: Internet and Web Services. CODATA 1994: 3-12

Between 1991 and 1993, the World Wide Web saw some moderate growth as certain sites adopted the free software and used it. But in June of 1993, a program called "Mosaic 1.0 for X-Windows" was released by the National Center for Supercomputing Applications (NCSA). This opened up the possibility for any machine running X-Windows to browse World Wide Web sites. Since X-Windows was the predominant platform for scientific machines on the Internet at the time, the Web began to spread like wildfire, attracting attention in many popular science journals.

After the release of Mosaic, WWW service traffic grew at an annual rate of 341,634%, while Gopher traffic grew by only 997%. By 1994 it was possible to order pizza from Pizza Hut on the Web, and in 1995 the World Wide Web surpassed FTP in the NSF's usage benchmarks (packet count and byte count) to become the most popular service on the Internet. Today, businesses have embraced the Web fully, and some even pay to place advertisements on the more popular pages.

The phenomenal success of the World Wide Web in the 90s cannot be explained by technology alone. If technological ability were the sole determining factor, a system like the World Wide Web could have appeared much sooner. Doug Engelbart had worked out most of the details by 1968, but when he presented his system to the community, they did not embrace it, even though it provided all the features of the early Web, if not more! Clearly, there were social factors that influenced when and where such a technology could be adopted, and these happened to be favorable for the World Wide Web.

By arriving at a later time, when the Internet had been fully developed as a social communications medium, the World Wide Web benefited from the large number of people who were now comfortable using the computer as a communications device. It was these people who felt free to set up their own personal "home pages," telling others about themselves and providing their friends with links to information. The familiarity needed for such acceptance came only after years of e-mail, multi-user dungeons, and other innovations. Also, the Internet had grown phenomenally by the time of the WWW, so people could perhaps more readily comprehend the capabilities of the system, rather than merely having to extrapolate the rewards.

The Web's main structural logic was to "not impose standards on hardware or software but rather on the data, to offer maximum portability." [8] Indeed, the rapid proliferation of cross-platform web clients like Mosaic was a critical piece of the project's success. Ted Nelson has acknowledged the Web's significance, but calls it "a simplified version of Xanadu" and insists that its anarchy, instability, and lack of regard for copyrights and royalties will ultimately be the Web's downfall.

There is some truth to what Nelson says: technologically, the Web is in many ways inferior to its theoretical predecessors. In the original proposal to design the WWW client and server software, Tim Berners-Lee made the modest request for just five software engineers and six months to complete the project. [8] The sheer simplicity of the Web's design allowed it to be implemented rapidly, but it has quickly spiraled into an uncontrollable mess that search and index firms are making a fortune sorting out. It is interesting to note that Nelson himself could easily have created a system like the World Wide Web rather than one like Xanadu. Yet he overestimated the technological demands that society would place on such a product. By not understanding the guiding social imperatives, he missed out on a phenomenal opportunity.

Conclusion

The Web was an outgrowth of developments in hypermedia, but it owed a great deal to the social climate that coalesced around the Internet. The interrelationship between technological change and social change is evident from this study. It is also interesting to consider whether hypermedia has realized the original ideal of expanding human mental powers. Those who have discovered the Web as a research tool or a handy reference know that it can save hours that might otherwise be spent looking up obscure information. Yet few authors have actually tried to exploit nonlinear composition in the way Ted Nelson originally envisioned: authors are still writing linear fiction, and hypermedia is exploited only in a few interactive video games. Still, the Web is growing exponentially, and if the hyperdocument comes to replace the linear document, authors might find themselves under a social imperative to begin writing all their works in hypertext.

Bibliography

- [1] Bush, Vannevar. As We May Think. The Atlantic Monthly. July 1945.

- [2] Nelson, Ted. Computer Lib. Microsoft Press, Redmond, Washington, 1987.

- [3] Nelson, Ted. Literary Machines. Mindful Press, South Michigan, 1987.

- [4] Zakon, Robert. Internet Timeline v2.2. The MITRE Corporation, 1995.

- [5] Eklund, Jon. Transcript of a Video History Interview with Mr. Doug Engelbart. National Museum of American History, Smithsonian Institution, May 1994.

- [6] Sterling, Bruce. Short History of the Internet. The Magazine of Fantasy and Science Fiction. F&SF, Cornwall, CT, February 1993.

- [7] Rheingold, Howard. Tools For Thought: The People and Ideas of the Next Computer Revolution. Simon & Schuster, 1985.

- [8] Berners-Lee, Tim. WorldWideWeb: Proposal for a HyperText Project. CERN Proposal, 1989.